Success in today’s digital world hinges on understanding user behavior and optimizing user experience. A/B testing, also known as split testing or bucket testing, has emerged as a key player in this arena. Page builder platforms like Landingi have simplified the process of website testing, making it accessible for businesses to conduct these insightful experiments.

This article will delve into six real-life A/B testing examples and case studies that demonstrate the transformative power of split tests. We’ll explore how seemingly minor modifications can lead to significant improvements in conversion performance. We’ll also shed light on the unexpected results such experiments can produce, proving that sometimes the most surprising changes yield the most effective outcomes. Finally, we’ll discuss common pitfalls in bucket testing, including rushed processes, disregarding statistical significance, and making changes based on inconclusive results.

Join us as we navigate the world of A/B testing and discover its potential to optimize conversion rates and enhance the user experience.

What Is A/B Testing?

A/B testing is a method for making data-driven decisions by comparing two versions of a webpage or app to determine which performs better. When more than two page variants are tested at once, the approach is often called A/B/x (or A/B/n) testing. In both cases, the aim is to optimize user experience and conversion rates.

Adelina Karpenkova’s article “A/B Testing in Marketing: The Best Practices Revealed” describes testing as an integral component of the conversion rate optimization (CRO) process. By definition, an A/B test investigates how similar audiences respond to two versions of the same content that differ in only a single variable.

According to CXL’s article “5 Things We Learned from Analyzing 28,304 Experiments” by Dennis van der Heijden, A/B tests are the go-to experiment for most optimizers, accounting for 97.5% of all experiments on the platform analyzed. Split tests have gained popularity due to their potential for repeatable success, increased revenues, and conversion rate optimization.

Thus, think of this testing as a marketing superpower that optimizes campaigns for maximum impact.

Where Is A/B Testing Used?

A/B testing is applied across many sectors and has proven to be a game-changer in industries such as:

- e-commerce

- SaaS

- publishing

- mobile apps

- email marketing

- social media

Creating a new version of an existing page and comparing its performance to the original enables businesses to boost conversion rates and optimize their digital presence based on actual user behavior and preferences. This can be particularly useful when managing multiple pages on a website.

What Are The Advantages of A/B Testing?

A/B testing allows you to eliminate guesswork, make decisions based on concrete evidence, optimize your online presence, and ultimately achieve better results. Its benefits can be grouped into 10 categories:

- Improved User Experience (UX): By testing different variations of your content, design, or features, you can identify which version provides a better user experience, leading to increased user satisfaction.

- Data-Driven Decisions: A/B testing provides concrete data on what works best for your audience, eliminating the need to rely on gut feelings or assumptions. This allows for more informed decisions.

- Increased Conversion Rates: By identifying which version of a webpage or feature leads to better conversion rates, you can optimize your sites to drive more sales, sign-ups, or any other desired actions.

- Reduced Bounce Rates: If users find your content or layout more engaging or easier to navigate, they’re less likely to leave the site quickly, reducing the bounce rate. For example, you may find that mobile users prefer a different layout or content presentation, leading to a decrease in bounce rates on mobile devices.

- Cost-Effective: Many A/B testing examples demonstrate how conducting such trials can prevent costly mistakes. By testing changes before fully implementing them, businesses can avoid investing in features or designs that don’t resonate with their audience.

- Risk Reduction: Launching major changes without testing can be risky. A/B testing allows you to test changes on a smaller audience first, reducing the potential negative impact of a full-scale rollout.

- Improved Content Engagement: Testing different headlines, images, or content layouts can help identify what keeps users engaged for longer periods.

- Better ROI: For businesses spending money on advertising or marketing campaigns, A/B testing can ensure that landing pages are optimized for conversion, leading to a better return on investment.

- Understanding Audience Preferences: Over time, consistent A/B testing can provide insights into audience preferences and behaviors, allowing for better-targeted content and marketing strategies.

- Continuous Improvement: Split testing fosters a culture of continuous improvement where businesses are always looking for ways to optimize and enhance their online presence.

CXL’s report “State of Conversion Optimization 2020” ranks A/B testing as the second most effective conversion optimization strategy. Furthermore, as Smriti Chawla points out in VWO’s “CRO Industry Insights from Our In-App Survey Results”, statistically significant tests have been shown to increase conversion rates by an average of 49%.

Clear hypotheses and well-executed bucket tests can therefore unlock the full potential of your website or app and maximize your return on investment.

What Are The Disadvantages of A/B Testing?

The primary disadvantage of A/B testing is the possibility of alienating users if tests are run too frequently or without proper preparation. Poorly planned tests can expose users to inconsistent experiences, which may cause dissatisfaction.

Moreover, common pitfalls in A/B testing include rushed processes, disregarding statistical significance, and making changes based on inconclusive test results. Rushed processes may lead to poorly designed trials, while disregarding statistical significance can result in decisions based on random chance rather than an actual effect. Making changes based on inconclusive results can also cause confusion and produce misleading data for future decisions.

You must allow adequate time for each test, ensure a sufficient sample size for statistical significance, and refrain from making changes until the results are conclusive and statistically significant.
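To see why waiting for a conclusive result matters, the sketch below simulates A/A tests, in which both versions share the same true conversion rate, so any “winner” is a false positive. It compares a tester who checks significance after every batch of traffic (peeking) with one who checks once, after the planned sample is collected. The 20% conversion rate, batch size, number of checks, and function names are illustrative assumptions; the significance check is a standard two-sided two-proportion z-test at the 0.05 level.

```python
import random
from math import sqrt
from statistics import NormalDist


def z_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        return 1.0  # no variation in the data, so no evidence of a difference
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(runs=300, looks=10, batch=100, rate=0.2, alpha=0.05, seed=1):
    """Return (false-positive rate with peeking, false-positive rate with one final check).

    Both arms have the same true conversion rate, so every significant result is a false positive.
    """
    rng = random.Random(seed)
    peeking_hits = final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        declared_winner = False
        for _ in range(looks):
            # Add one batch of visitors to each arm.
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            # The impatient tester checks after every batch; record whether any check "won".
            if z_test_p_value(conv_a, n, conv_b, n) < alpha:
                declared_winner = True
        peeking_hits += declared_winner
        # The disciplined tester looks only once, after the planned sample is collected.
        final_hits += z_test_p_value(conv_a, n, conv_b, n) < alpha
    return peeking_hits / runs, final_hits / runs


peeking_rate, final_rate = simulate_aa_tests()
print(f"False positives with peeking: {peeking_rate:.1%}")
print(f"False positives with a single final check: {final_rate:.1%}")
```

With settings like these, the peeking strategy usually flags several times more false winners than the nominal 5%, while the single final check stays close to it.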

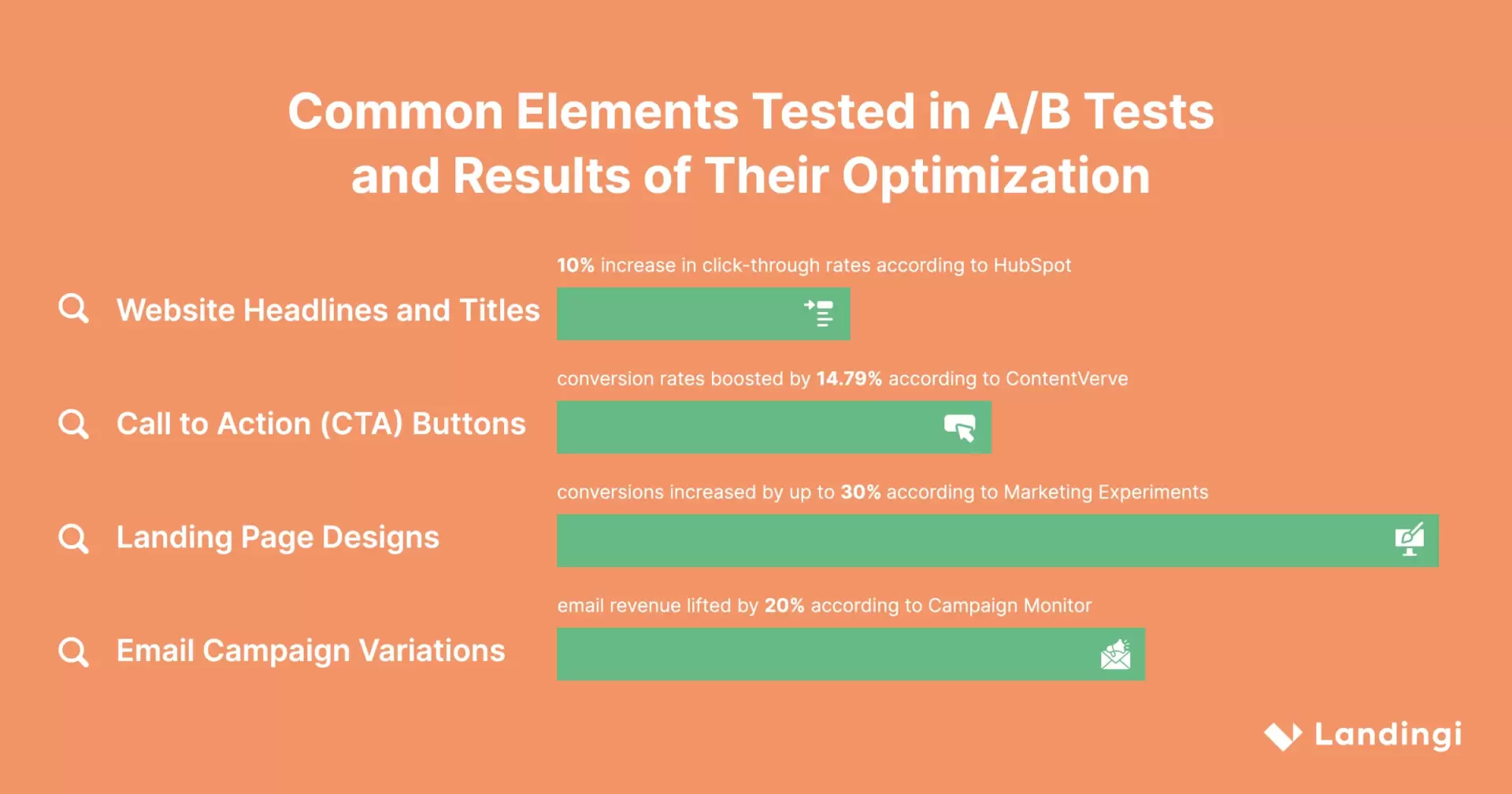

Common Elements Tested in A/B Tests

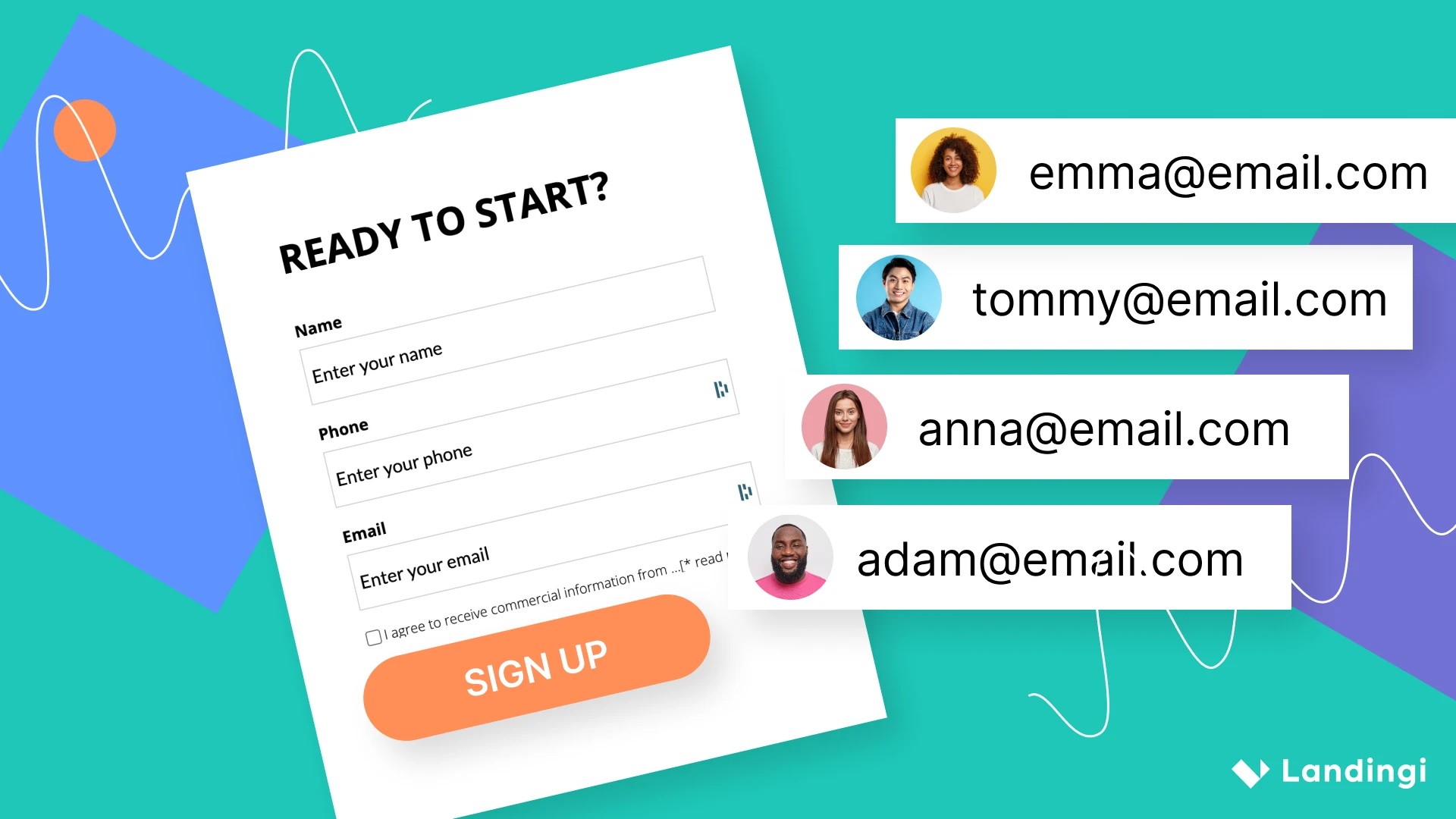

The most common elements tested in A/B tests include:

- Headlines and titles

- CTA (Call To Action) buttons

- Landing page designs

- Email campaign variations

Signup forms, social proof sections, and ad copy are also frequently tested. The key to a successful A/B test is focusing on the elements that have the most significant impact on user behavior and conversion rate.

Let’s explore the most popular elements in more detail.

Website Headlines and Titles

A captivating headline can make all the difference in attracting and retaining website visitors. A/B testing allows you to experiment with different headings and titles to identify which ones resonate better with your target audience. By modifying factors such as wording, messaging, and style, you can optimize your copy for higher click-through rates, increased engagement, and improved website performance.

According to HubSpot, experimenting with headlines and titles can increase click-through rates by up to 10%.

Call To Action (CTA) Buttons

CTA buttons play a crucial role in driving conversions. A/B tests enable you to compare different versions of CTA buttons to identify which one yields better results in terms of conversion rates. By altering the design, color, size, text, or position of the buttons, you can influence user behavior and enhance the efficacy of your call to action.

According to ConvertVerve, split testing buttons boosted conversion rates by 14.79%.

Landing Page Designs

A well-designed landing page can significantly improve user experience and conversion rates. In landing page tests, commonly modified features include:

- Page layout

- Headlines

- Sub-headlines

- Body text

- Pricing

- Buttons

- Sign up flow

- Form length

- Graphic elements

Experimenting with different landing page variations is crucial for optimizing lead quality and conversion rates. By testing various designs, you can identify which elements resonate with your audience and drive desired actions, and which ones may be causing friction or confusion. This process of continuous improvement can significantly enhance the overall performance of a landing page.

According to Marketing Experiments, optimizing landing page design can increase conversions by up to 30%.

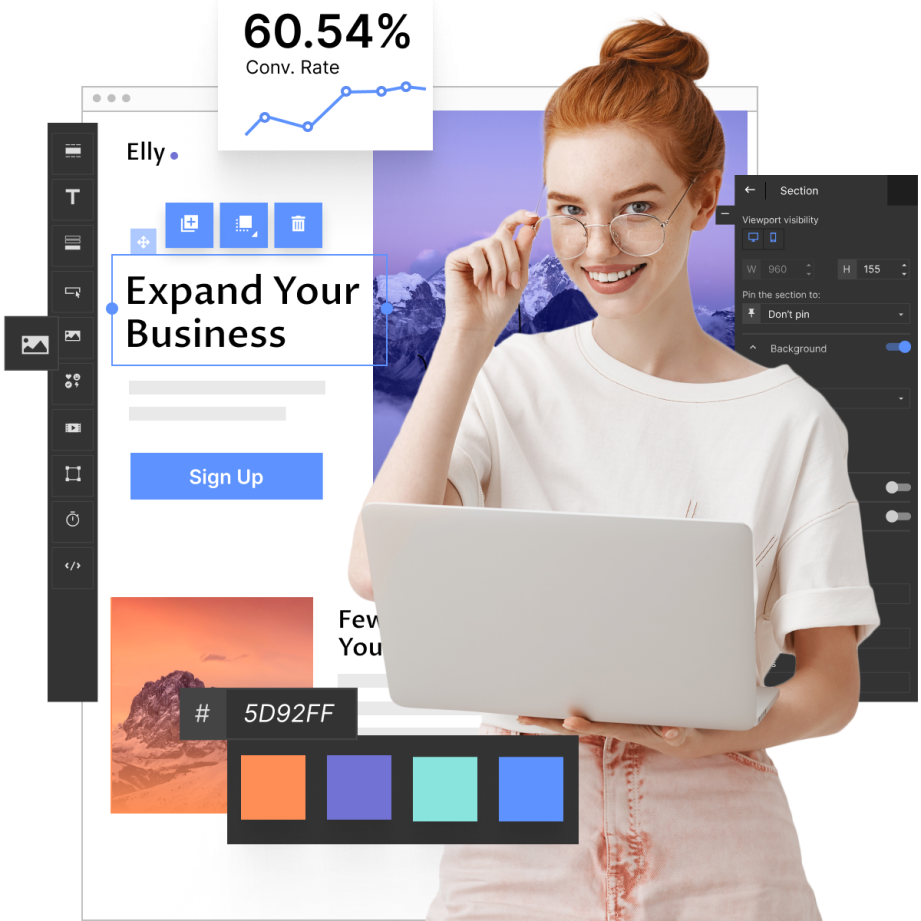

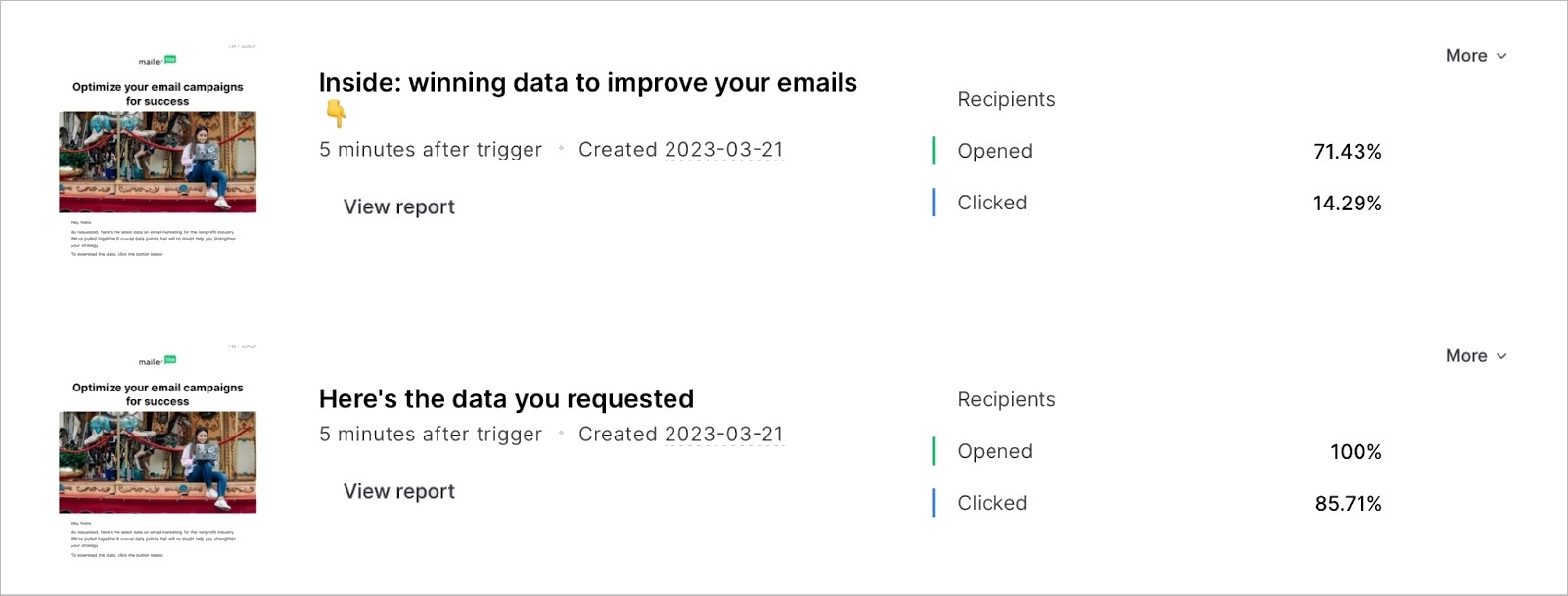

Landingi, a renowned landing page builder, offers a plethora of features that cater to this very need. With its intuitive interface and robust testing capabilities, Landingi makes it easy to create, duplicate, modify, test, and optimize landing pages.


Email Campaign Variations

Email campaigns are a powerful marketing tool, and A/B testing can help you optimize your campaigns for maximum impact. By creating and comparing multiple versions of your messages, you can identify which variations resonate better with your target audience and lead to higher open rates and click-through rates.

A/B testing is an invaluable tool that allows you to refine your email marketing strategy based on actual user behavior and preferences.

For example, consider a company selling handmade jewelry online. They could use split testing to find the most effective email newsletter subject line. They create two versions of the same email, differing only in the subject line. One email might have the subject line “New Collection: Handcrafted Jewelry Just For You,” and the other “Discover Your New Favorite Piece.” By sending these emails to different subsets of their audience, they can track which email has a higher open rate. The most successful subject line is then used for future newsletters, potentially leading to increased engagement and sales.
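The audience split in this example can be sketched in a few lines: shuffle the subscriber list, cut it into two equal random halves, and compare open rates once the emails have gone out. This is a minimal illustration assuming you already have the subscriber list and the set of addresses that opened each email; the function names and sample addresses are hypothetical.

```python
import random


def split_audience(subscribers, seed=42):
    """Randomly split a subscriber list into two equally sized, non-overlapping groups."""
    shuffled = list(subscribers)           # copy so the original list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def open_rate(group, opened):
    """Share of a group whose address appears in the set of subscribers who opened the email."""
    return sum(1 for email in group if email in opened) / len(group)


subscribers = [f"customer{i}@example.com" for i in range(1000)]  # hypothetical list
group_a, group_b = split_audience(subscribers)
subject_lines = {
    "A": "New Collection: Handcrafted Jewelry Just For You",
    "B": "Discover Your New Favorite Piece",
}
# After sending subject_lines["A"] to group_a and subject_lines["B"] to group_b,
# collect the addresses that opened each email and compare
# open_rate(group_a, opened_a) with open_rate(group_b, opened_b).
```

Randomizing the split matters: if, say, the first half of an alphabetical list received version A, any difference in open rates could come from who was in each group rather than from the subject line.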

According to Campaign Monitor, email campaign variations can lift email revenue by 20%.

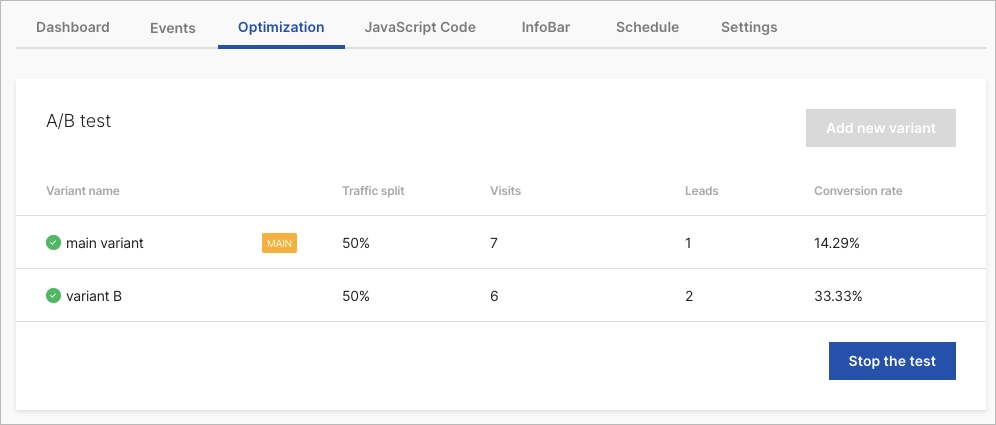

1. Landing Page A/B Testing Example

The first of our A/B testing examples naturally involves a landing page, given that marketers regularly test this type of website. ForestView, an agency based in Athens, Greece, embarked on an extensive A/B testing campaign to optimize their client’s landing page.

The ForestView team hypothesized that reducing the amount of scrolling required would help users find their preferred product more easily, improving the form’s conversion rate. To test this hypothesis, they ran an A/B test with a redesigned landing page. The new design included multi-level filtering to allow users to find their preferred product dynamically and replaced a long list of products with carousels.

The A/B test was run for 14 days, with over 5,000 visitors split equally between the control and variation groups. The results showed that the variation outperformed the control, with the multi-level filtering and carousel design increasing the form conversion rate by 20.45% on mobile and 8.50% on desktop. Additionally, user engagement increased by 70.92%.

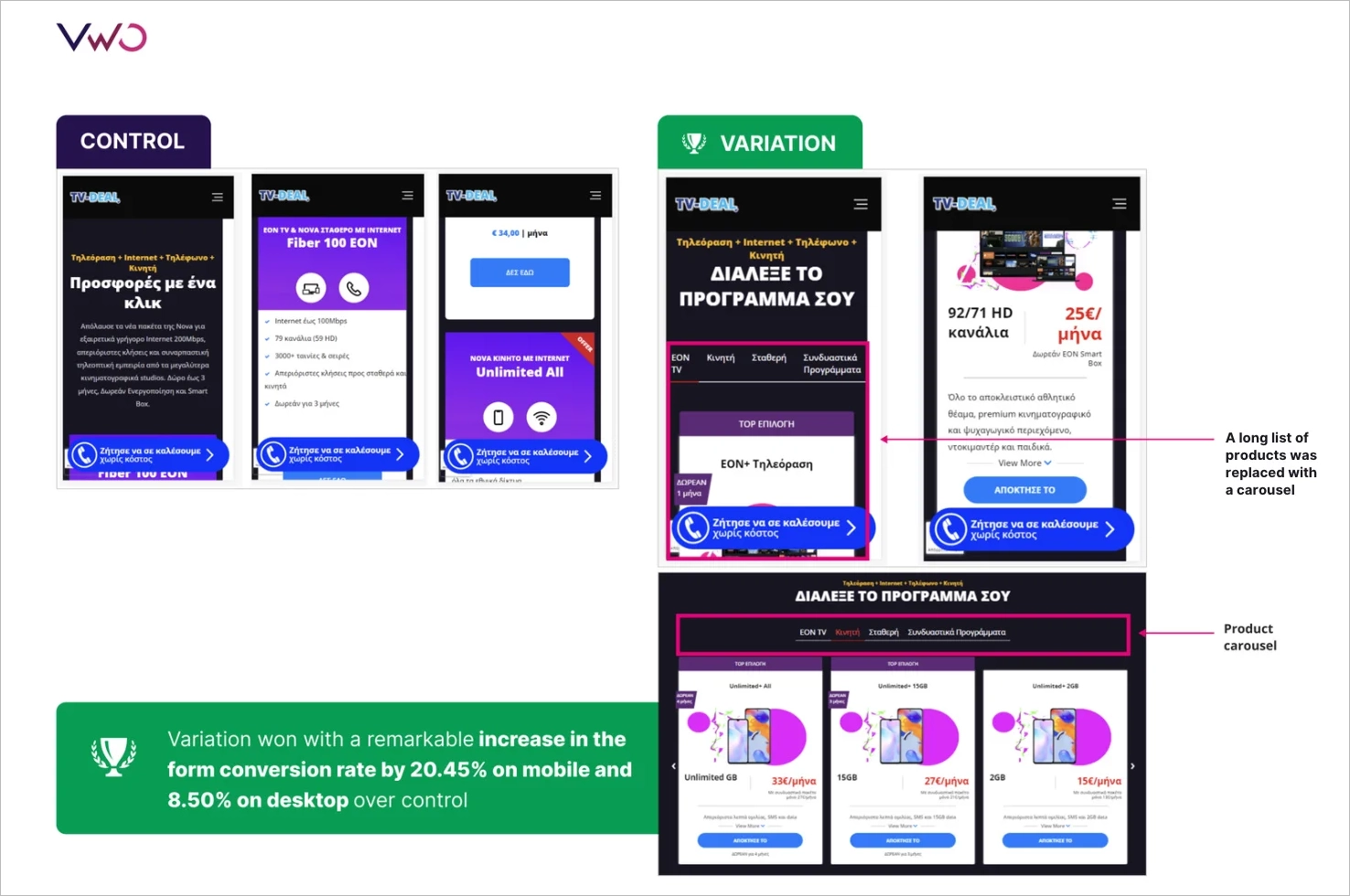

2. SaaS A/B Testing Example

In the SaaS industry, A/B testing is a powerful way to optimize website design. An article on Signal v. Noise, Basecamp’s blog, provides insights into the concrete advantages of split testing on the Highrise marketing site.

The team hypothesized that a concise design, named the “Person Page,” would appeal more to users and result in higher conversion rates. To test their theory, they ran an A/B test comparing the “Person Page” with the “Long Form” design. The “Person Page” produced 47% more paid signups than the “Long Form” design and 102.5% more than the original design. However, when they added extra information to the design, its effectiveness dropped by 22%.

In another experiment, the team analyzed the impact of customer photos on conversions. They discovered that large, cheerful customer images increased conversions, but the specific person featured in the picture did not matter.

The team spent several weeks conducting A/B tests, carefully analyzing user behavior and preferences. The main takeaway from their experiments was that bucket testing is a valuable tool that can debunk assumptions and reveal design elements that truly resonate with users.

3. Real Estate A/B Testing Example

The real estate industry, like many others, relies heavily on the effectiveness of its digital platforms. Real estate giants such as Zillow, Trulia, and StreetEasy understand the importance of continuously refining their user experience to cater to their audience’s needs. They achieve this by conducting A/B testing, as described in Anadea’s “How Effective A/B Testing Helps to Build a Cool Real Estate App” article.

StreetEasy, a New York City-based real estate platform, recognized the unique preferences of its audience. They conducted an A/B test to determine the most effective search filters for their users and discovered that users preferred searching by neighborhoods and building types. In NYC, the location of an apartment and the type of building it’s in can significantly influence a potential buyer’s or renter’s decision. For example, someone accustomed to living in Tribeca might not be interested in an apartment in Chinatown, even if it meets all their other criteria.

In addition to search filters, the visual representation of listings is also crucial for potential buyers. Visitors spend approximately 60% of their time looking at listing photos, making the size of these images a significant factor in user engagement and conversion rates.

Moreover, the description accompanying a listing can sway a potential buyer’s decision. Zillow conducted a study analyzing 24,000 home sales and discovered that certain words in listing descriptions were associated with homes selling at higher prices than expected. Words like “luxurious,” “landscaped,” and “upgraded” were among those linked to these higher sale prices.


4. Mobile App A/B Testing Example

The fourth of our A/B testing examples involves a mobile app, reflecting the growing focus on mobile app optimization. AppQuantum and Red Machine collaborated on an extensive A/B testing campaign for their Doorman Story app, a time management simulation game in which players manage their own hotel. The example was described in the article “How to Conduct A/B Tests in Mobile Apps: Part I,” published on Medium.

The AppQuantum team decided to test whether introducing a paid game mechanic, specifically a chewing gum machine, could be a lucrative monetization strategy. This would mean selling existing game mechanics that other games typically offer for free. They introduced this unique paid game mechanic in a specific set of levels, making sure it did not disrupt the overall game balance.

The purpose of the A/B test was to gauge how valuable this new mechanic was to players. They introduced a paywall to measure user reactions and to determine whether players considered the purchase worthwhile. The primary risk, however, was that players might be deterred by having to pay for tools that were previously free.

The A/B test showed that the simplest version of the mechanic was the most successful. This unexpected outcome emphasized the importance of A/B testing in mobile apps, showing that sometimes the simplest solutions yield the best results.

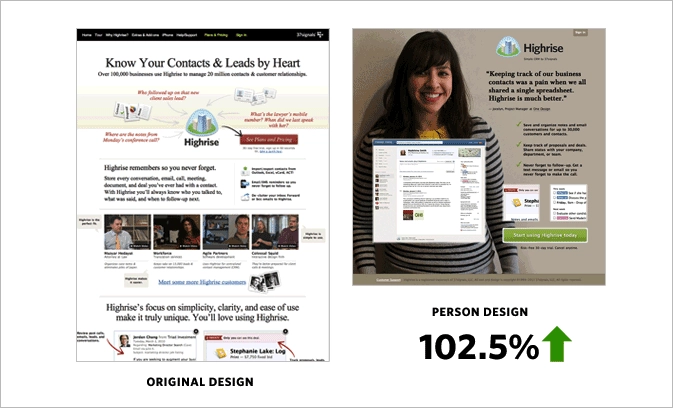

5. Email Marketing A/B Testing Example

Some intriguing A/B testing examples come from MailerLite and are described in Jonas Fischer’s article “A/B testing email marketing examples to improve campaigns, landing pages and more”. Over the years, the MailerLite team ran several A/B tests to determine the effectiveness of using emojis in email subject lines, the length of subject lines, starting the subject line with a question, and the positioning of images and GIFs, as well as to test automation.

Let’s examine the first two options to gain deeper insights into email marketing A/B testing. Initially, the MailerLite team’s tests of emojis in subject lines were not very promising: in 2020, the open rate for a subject line with an emoji was 31.82%, compared to 31.93% without one. As time progressed, however, further A/B split tests consistently found that emojis in the subject line worked better for their audience. In recent tests, the open rate for a subject line with an emoji was 37.33%, compared to 36.87% without one, a modest but positive difference.

Testing subject line length also showed that it can influence email engagement. MailerLite found that concise subject lines were more effective in driving clicks for their subscribers. The test showed that shorter subjects can achieve a 100% open rate and an 85.71% click rate.

6. E-commerce A/B Testing Example

The article titled “The Battle of Conversion Rates — User Generated Content vs Stock Photos” by Tomer Dean delved into the effectiveness of user-generated content (UGC) versus stock photos in the realm of e-commerce. An extensive A/B testing campaign primarily revolved around fashion and apparel items, comparing images of real people wearing products against professionally-shot stock photos.

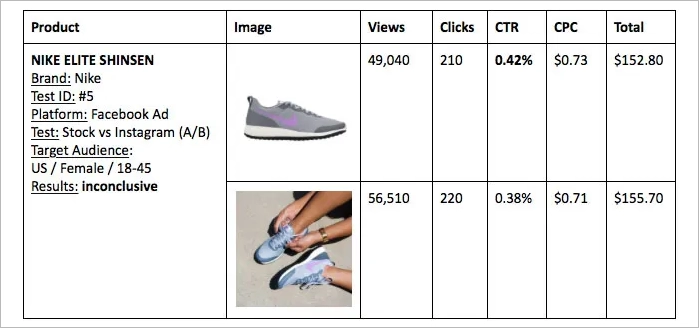

In one of the tests, a Nike sports bra’s stock image was pitted against a user-generated image from Instagram. The results were intriguing, with the UGC image achieving a conversion rate of 0.90% compared to the stock photo’s 0.31%. Similar tests were conducted for other products, such as a Zara skirt and Nike running shoes, with varying results. In another experiment involving red high heels, a landing page displaying the stock image alongside three UGC images significantly outperformed the page with only the stock image.
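Results like these are often reported as a relative lift: the percentage change of the variation over the control. A quick sketch using the conversion rates quoted above for the Nike sports bra test (the helper name is illustrative):

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant over the control, e.g. 0.5 means +50%."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


# Nike sports bra test: stock photo (control) 0.31% vs. UGC image (variant) 0.90%.
lift = relative_lift(0.0031, 0.0090)
print(f"Relative lift: {lift:.0%}")  # prints: Relative lift: 190%
```

A relative lift on its own says nothing about statistical significance; that depends on how many visitors saw each version, so it should be read alongside a significance test.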

The A/B tests underscored the potential of UGC in enhancing conversion rates. However, the article also emphasized the importance of continuous testing and adhering to copyright norms when sourcing images.

What is even more fascinating is that e-commerce sites generate an average revenue of $3 per unique visitor, and a successful A/B test can boost this figure by up to 50%, as illustrated in Smriti Chawla’s article “CRO Industry Insights from Our In-App Survey Results” on VWO’s website.

Can A/B testing be applied to every element on a website?

While A/B testing can be applied to any element on a website, it is more important to prioritize the elements that significantly impact user behavior and conversion rates. By systematically testing and optimizing key elements, businesses can maximize the benefits of A/B testing and make data-driven decisions that lead to improved user experience and increased revenues.

Is there a risk of alienating users with frequent A/B tests?

If not planned and executed carefully with user experience and preferences in mind, frequent A/B tests could potentially alienate users. It’s important to strike a balance between testing and maintaining a consistent user experience.
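One common way to keep each visitor’s experience consistent during a test is deterministic (“sticky”) assignment: hash a stable user ID together with the experiment name so that the same user always lands in the same variant across visits, without storing any state. A minimal sketch, assuming you have a stable user ID; the experiment name and variant labels are hypothetical, and the same function handles A/B/x tests by passing more variants.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("control", "variation")) -> str:
    """Deterministically map a user to one variant of an experiment."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    # SHA-256 yields the same well-mixed number for the same input on every run.
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# The same user sees the same version on every visit.
print(assign_variant("user-123", "homepage-headline"))
print(assign_variant("user-123", "homepage-headline"))
```

Including the experiment name in the hash means a user’s bucket in one test does not determine their bucket in another, so concurrent tests do not always pair the same users together.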

What is a control group in A/B testing?

In A/B testing, the control is the original version of a webpage or app, and the control group is the set of users who see it. Together they serve as the baseline against which the variation is compared, making it possible to assess the impact of the changes made in the test version.

What constitutes a statistically significant result in A/B tests?

A statistically significant result in an A/B test indicates that the observed difference between the control and the variation is unlikely to be due to chance alone. The threshold is typically set at 95% confidence (a significance level of 0.05), which means that if there were no real difference between the versions, a difference at least as large as the one observed would occur less than 5% of the time.
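For comparing conversion rates, one standard way to check significance is a two-proportion z-test. The sketch below uses only Python’s standard library; the visitor and conversion counts in the example are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates B - A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # overall rate, assuming no real difference
    if pooled in (0.0, 1.0):
        raise ValueError("need at least one conversion and one non-conversion")
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical test: control converts 200 of 5,000 visitors (4%), variation 250 of 5,000 (5%).
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.3f}")
print("Significant at 95% confidence" if p < 0.05 else "Not significant")
```

Here the p-value falls below 0.05, so the variation’s higher conversion rate would count as statistically significant at the usual 95% threshold.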

What percentage of A/B/x tests fail?

According to CRO Industry Insights by Smriti Chawla from VWO, about 14% of A/B tests are statistically significant winners, meaning that the remaining 86% do not produce a statistically significant improvement in conversion rates. Convert.com’s research yielded similar results. However, the failure rate can vary depending on the industry and the complexity of the test.

What are the popular tools used in A/B testing?

There are several popular A/B testing tools available to help businesses optimize their digital presence, such as:

- Optimizely

- VWO

- Google Optimize (discontinued by Google in September 2023)

- Adobe Target

- AB Tasty

These tools offer various features and functionalities that allow businesses to design, manage, and analyze A/B tests effectively.

Which has a more profound impact on user behavior: changing CTA color or its text?

The CTA text is often more critical, as it directly communicates the desired action, but both color and text can influence user behavior. In “The Button Color A/B Test: Red Beats Green,” published on HubSpot, Joshua Porter reports that changing the color of a call-to-action button resulted in a 21% increase in conversions. On the other hand, Melanie Deziel’s “How to Build and Optimize CTA Buttons That Convert” suggests that personalized CTA text can improve conversion rates by up to 202%. So while a new color can make a CTA button more visually appealing, changing its text can have a larger effect on user behavior and conversions.

Which industries benefit the most from A/B testing?

Industries that benefit the most from A/B testing include:

- E-commerce

- SaaS

- Digital marketing

- Mobile app development

Businesses in these industries can significantly enhance their digital presence and achieve growth by using split tests to optimize user experience, improve conversion rates, and enable data-driven decisions.

What is A/B testing in marketing?

A/B testing in marketing involves comparing different marketing strategies, ad creatives, or messaging to determine which one drives better results. By systematically testing different variations of marketing campaigns, businesses can identify which approaches resonate with their target audience and result in higher engagement and conversions. Additionally, running more tests allows for a more comprehensive understanding of what works and what doesn’t, thereby enabling more accurate and effective marketing decisions.

What is an example of A/B testing on social media?

A/B testing on social media involves comparing different ad creatives, targeting options, or post formats. For example, a business could test two different images for a Facebook ad, or two targeting options for the same ad, to identify which version results in higher engagement and conversions.

Why do we use A/B testing?

A/B testing is used to facilitate data-driven decisions, optimize user experience, and enhance conversion rates. By systematically testing different variations of webpages, apps, and marketing campaigns, marketers can fine-tune their campaigns, maximize their return on investment, and ensure they stay ahead of the competition and achieve growth.

Is A/B testing a KPI?

A/B testing itself is not a KPI; it is a method for measuring how changes to a website or app affect KPIs such as conversion rate, click-through rate, or revenue per visitor. By systematically testing different variations and analyzing the results, businesses can identify the most effective strategies and elements that drive conversions.

What is an example of A/B testing in real life?

Real-life examples of A/B testing include testing different pricing strategies, product packaging, or store layouts to optimize sales and customer satisfaction. By comparing different versions of these variables, companies can identify which changes lead to higher sales and a better customer experience.

What are A/B samples?

A/B samples refer to the two versions (control and variation) of a webpage or app being tested. By comparing the performance of these samples, you can identify which version performs better and optimize your digital presence.

How many companies use A/B testing?

According to a 2020 CXL Institute survey, “State of Conversion Optimization”, approximately 44% of companies employ A/B testing software. This survey was conducted among 333 companies of various sizes, from small startups to huge corporations, across a range of industries.

The use of A/B testing is more prevalent among companies with a strong online presence, such as e-commerce and SaaS businesses. For instance, a 2019 Econsultancy Conversion Rate Optimization Report revealed that 77% of companies that have a structured approach to improving conversion rates use A/B testing to some extent.

How does Netflix use A/B testing?

Netflix uses A/B testing to improve its user experience by testing different user interfaces, recommendation algorithms, thumbnails for shows and movies, and more. The goal is to determine which variations users prefer and which lead to more engagement and longer viewing times.

Does YouTube have A/B testing?

YouTube itself conducts A/B testing on its platform to optimize user experience and features. Native testing options for creators have historically been limited; YouTube has since introduced a “Test & Compare” feature in YouTube Studio for comparing video thumbnails, but for other kinds of tests creators still need external tools or methods.

Does Shopify have A/B testing?

Shopify does not have a built-in A/B testing feature, but several third-party apps in the Shopify App Store allow merchants to run tests.

Can Mailchimp do A/B testing?

Yes, Mailchimp does offer A/B testing features. It allows users to test different subject lines, content, and send times to optimize email campaigns.

Can Wix do A/B testing?

Wix does not have a built-in A/B testing feature, but third-party testing tools can be integrated with Wix websites (Google Optimize was a common choice until Google discontinued it in September 2023). By leveraging such tools, businesses using Wix can run A/B tests to optimize their websites, enhance user experience, and boost conversion rates.

Does Google do A/B testing?

Yes, Google frequently conducts split testing to evaluate changes and improvements to its products, services, and algorithms. This helps Google ensure that any new features or changes provide a positive user experience and meet their intended goals.

How to do A/B testing with Google Ads?

To conduct A/B tests with Google Ads, follow these four steps:

- Identify the element you wish to test (ad copy, landing page, keywords, etc.).

- Create two versions of your ad, one with the original element and one with the modified element.

- Run both ads simultaneously for a set period.

- Analyze the performance data of both ads to determine which version delivers better results.

How to conduct an A/B test in Excel?

You can conduct an A/B test in Excel by organizing your data into two groups: A (control) and B (test). Calculate the mean and standard deviation for each group. Then use Excel’s T.TEST function to compare the means of the two groups and determine whether the difference is statistically significant; for two independent groups, set the type argument to 2 (equal variances) or 3 (unequal variances).
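Outside Excel, the same comparison can be sketched in Python. The snippet below computes Welch’s t statistic, the unequal-variance two-sample test that corresponds to T.TEST type 3, for two samples such as revenue per visitor in groups A and B. To stay within the standard library, it approximates the p-value with the normal distribution, which is only reasonable for large samples; for small samples, use an exact t-distribution (for example, Excel’s T.TEST or SciPy’s `scipy.stats.ttest_ind` with `equal_var=False`). The function name and sample values are illustrative.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def welch_t_test(a, b):
    """Return (t, degrees of freedom, approximate two-sided p-value) for mean(b) - mean(a)."""
    n_a, n_b = len(a), len(b)
    # Squared standard errors; statistics.variance uses the sample (n - 1) denominator.
    se2_a, se2_b = variance(a) / n_a, variance(b) / n_b
    t = (mean(b) - mean(a)) / sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    # Normal approximation to the t distribution: acceptable only when df is large.
    p_value = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, df, p_value


# Tiny hypothetical samples, for illustration only (too small for the normal approximation).
group_a = [1, 2, 3, 4, 5]  # control group
group_b = [2, 3, 4, 5, 6]  # test group
t, df, p = welch_t_test(group_a, group_b)
print(f"t = {t:.2f}, df = {df:.1f}, approximate p = {p:.3f}")
```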

How much data is needed for the A/B test?

Factors such as the desired level of statistical significance, the expected effect size, and the traffic to the tested page or app influence the amount of data needed for an A/B test. A larger sample size is generally recommended for a more reliable test, with some industry specialists suggesting a minimum sample size of 100 conversions per variation.
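The interplay of significance level, effect size, and traffic can be made concrete with a standard approximation for the number of visitors needed per variation when comparing two conversion rates. This is a minimal sketch; the baseline rate, expected lift, 95% confidence, and 80% power in the example are illustrative assumptions, not recommendations.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variation(baseline: float, expected: float, alpha=0.05, power=0.80) -> int:
    """Approximate sample size per variation for a two-sided test of two proportions.

    n = (z_{1-alpha/2} + z_{power})^2 * [p1(1-p1) + p2(1-p2)] / (p2 - p1)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance_sum = baseline * (1 - baseline) + expected * (1 - expected)
    return ceil((z_alpha + z_power) ** 2 * variance_sum / (expected - baseline) ** 2)


# Hypothetical: 10% baseline conversion rate, hoping to detect a lift to 12%.
n = visitors_per_variation(0.10, 0.12)
print(f"About {n} visitors per variation")
```

Because the required sample grows with the inverse square of the difference you want to detect, halving that difference roughly quadruples the traffic needed. In this example, the result corresponds to several hundred conversions per variation, well above the 100-conversion rule of thumb.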

The specific context and goals of the A/B test should also be taken into account when determining the appropriate amount of data needed.

Is A/B testing expensive?

The cost of A/B testing depends on the tools you use, the complexity of the tests, and whether you’re conducting them in-house or outsourcing. Some online tools offer free basic services, while others may charge a monthly fee.

Conclusion

A/B testing is not just a tool but a mindset of continuous improvement and adaptation. It empowers marketers to make informed decisions, backed by data, to refine their strategies and achieve optimal results. Whether it’s tweaking a CTA button, experimenting with landing page designs, or testing email campaign variations, A/B testing is an invaluable asset in the marketer’s toolkit. As we move deeper into the digital age, split testing plays an increasingly important role in shaping user experiences and driving business success.

Platforms like Landingi have democratized access to this powerful tool, enabling businesses of all sizes to conduct insightful experiments. With Landingi, you can create a great campaign and optimize it with multivariate tests, campaign scheduler, dynamic text replacement, and personalization options to achieve the best results. The most exciting thing is that you can begin using the Landingi platform for free!